Can the digital whispers of the internet, those cryptic arrangements of characters, ever truly be deciphered and restored to their original, intended form? The answer, surprisingly, is often yes, thanks to a combination of understanding encoding standards and applying the right tools to the task.

The digital world, a vast landscape of information exchange, relies on a set of rules, a universal language if you will, to ensure that what is sent is what is received. But like any language, misunderstandings and errors can occur. One of the most frustrating of these is the garbled text we know as mojibake or, more colloquially, online garbled characters. This phenomenon arises from a mismatch between the encoding used to store or transmit text and the encoding used to interpret it. Common causes include incorrect character set declarations in web pages, email clients misinterpreting data, and even corrupted data files. Understanding the source of the problem is the first step towards finding a solution.

The challenge lies in the fact that computers store text as numbers, which are mapped to characters by an encoding scheme. Two of the most prevalent schemes are UTF-8 and ISO-8859-1 (often referred to as Latin-1). UTF-8, a variable-width encoding, is the dominant character encoding for the World Wide Web. It can represent every Unicode character, using between one and four bytes for each, making it incredibly versatile. ISO-8859-1, on the other hand, is a single-byte encoding that covers most of the Western European languages. When a text encoded in UTF-8 is interpreted as if it were in ISO-8859-1, or vice versa, the characters are misread, and the dreaded garbled text appears.
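To make the difference in width concrete, here is a small Python sketch that reports how many bytes a few characters occupy in each encoding. The sample characters and the helper name `byte_lengths` are chosen purely for illustration.

```python
# Compare how many bytes a character needs in UTF-8 and in ISO-8859-1.
samples = ["a", "ç", "€", "中"]

def byte_lengths(ch):
    """Return (utf8_length, latin1_length), with None if Latin-1 lacks ch."""
    utf8_len = len(ch.encode("utf-8"))
    try:
        latin1_len = len(ch.encode("iso-8859-1"))
    except UnicodeEncodeError:
        latin1_len = None  # the character does not exist in ISO-8859-1
    return utf8_len, latin1_len

for ch in samples:
    print(ch, byte_lengths(ch))
```

ASCII letters take one byte in both encodings, ç takes two bytes in UTF-8 but one in ISO-8859-1, and the last two samples cannot be stored in ISO-8859-1 at all.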

Consider the example of the character ç. In ISO-8859-1, this character is stored as a single byte with the value 231 (0xE7). Its Unicode code point is likewise U+00E7, but UTF-8 stores it as the two-byte sequence 0xC3 0xA7. If a program reads that UTF-8 sequence as ISO-8859-1, it treats each byte as a separate character and displays Ã§ instead of ç. This explains why a word, encoded correctly, might appear as a sequence of symbols that make no sense, or even worse, as entirely different, unexpected characters. The resulting text is often unreadable, making the original information inaccessible.
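The ç example can be reproduced in a few lines of Python. This is only a sketch of the mismatch, using the standard `str.encode` and `bytes.decode` methods; the variable names are illustrative.

```python
original = "ç"

latin1_bytes = original.encode("iso-8859-1")  # one byte, decimal 231
utf8_bytes = original.encode("utf-8")         # two bytes, 0xC3 0xA7

# Reading the UTF-8 bytes with the wrong decoder produces mojibake.
garbled = utf8_bytes.decode("iso-8859-1")

print(list(latin1_bytes))  # [231]
print(utf8_bytes.hex())    # c3a7
print(garbled)             # Ã§
```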

Fortunately, a range of tools and techniques exist to tackle this problem. Online decoders are readily available, allowing users to input the garbled text and attempt to convert it back to its original state. These tools typically allow the user to specify the source and target encodings, such as UTF-8, GBK (a common Chinese encoding), or ISO-8859-1. Another approach is to use programming languages or text editors to perform the conversion manually. Many text editors offer the option to select the encoding used to open and save a file, allowing users to experiment with different encodings until the text displays correctly. Experimenting in this way also reveals which encoding the text was written in and which one must be applied to read it.
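As a rough illustration of the programmatic route, the sketch below reverses a single wrong decoding step in Python. The function name `repair` and its default encodings are assumptions made for this example; the encodings should be swapped for whatever pair is suspected.

```python
def repair(garbled, wrong="iso-8859-1", right="utf-8"):
    """Undo one wrong decoding step; return None if the guess does not fit."""
    try:
        # Recover the raw bytes, then decode them with the intended encoding.
        return garbled.encode(wrong).decode(right)
    except (UnicodeEncodeError, UnicodeDecodeError):
        return None

print(repair("franÃ§ais"))  # français
print(repair("français"))   # None, because this text was never garbled
```

Returning `None` rather than raising makes it easy to try several encoding pairs in turn, much as one would in a text editor.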

The recovery process can sometimes be complicated by the nature of the garbled text itself. If the text has been corrupted during multiple encoding conversions, or if the original encoding is unknown, the process can become more challenging. However, even in complex cases, it is often possible to recover at least some of the original text. When dealing with documents in languages like Polish, which uses diacritics for its characteristic sounds (such as ą and ł), correct handling of encoding becomes extremely important to avoid any loss of information. The Polish alphabet consists of 32 letters written in the Latin script with these diacritics, and several of them, including ą and ł, do not exist in ISO-8859-1 at all. Stress in Polish usually falls on the second-to-last syllable, but it is not marked in writing and so plays no part in encoding.
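Text that has passed through several bad conversions can sometimes be unwound one layer at a time. The following Python sketch assumes every layer was the same mistake (UTF-8 bytes misread as ISO-8859-1); `garble`, `unwind`, and `max_rounds` are hypothetical names used only here, and the Polish sample is a well-known pangram.

```python
def garble(text):
    """Simulate one bad conversion: UTF-8 bytes misread as ISO-8859-1."""
    return text.encode("utf-8").decode("iso-8859-1")

def unwind(text, max_rounds=5):
    """Reverse repeated misreadings; return (recovered_text, rounds_removed)."""
    rounds = 0
    while rounds < max_rounds:
        try:
            candidate = text.encode("iso-8859-1").decode("utf-8")
        except (UnicodeEncodeError, UnicodeDecodeError):
            break  # no further layer of this kind can be removed
        if candidate == text:
            break  # pure ASCII: another round would change nothing
        text, rounds = candidate, rounds + 1
    return text, rounds

damaged = garble(garble("zażółć gęślą jaźń"))
print(unwind(damaged))
```

The loop stops as soon as a step fails, which for Polish text happens naturally because letters such as ż cannot be encoded in ISO-8859-1. It is a heuristic: text whose correct form happens to look like misread UTF-8 could be unwound one step too far.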

Additionally, the use of Unicode/UTF-8 character tables can be invaluable. These tables provide a comprehensive list of characters and their corresponding numerical representations in Unicode. By examining the garbled text and comparing its characters to the characters in the Unicode table, it is sometimes possible to identify the correct encoding. For example, if the text contains characters that ISO-8859-1 cannot represent at all but that Unicode can, the original cannot have been ISO-8859-1, and UTF-8 is a likely candidate. Characters such as é, which is represented by 233 in ISO-8859-1, and ç, which is represented by 231, are good starting points: look up their byte values in each candidate encoding and compare them with the bytes actually present in the data.
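A miniature version of such a table can be generated with Python's standard `unicodedata` module. This is a sketch only; the helper `describe` and the layout of its result are assumptions made for illustration.

```python
import unicodedata

def hex_bytes(ch, encoding):
    """Return the bytes of ch in the given encoding as hex, or None."""
    try:
        return ch.encode(encoding).hex()
    except UnicodeEncodeError:
        return None  # the encoding has no representation for ch

def describe(ch):
    """Collect the code point, Unicode name, and byte values of one character."""
    return {
        "char": ch,
        "codepoint": ord(ch),
        "name": unicodedata.name(ch, "UNKNOWN"),
        "iso-8859-1": hex_bytes(ch, "iso-8859-1"),
        "utf-8": hex_bytes(ch, "utf-8"),
    }

for ch in "\u00e9\u00e7":  # é and ç
    print(describe(ch))
```

For é this reports code point 233, the single ISO-8859-1 byte `e9`, and the UTF-8 bytes `c3a9`; for ç it reports 231, `e7`, and `c3a7`.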

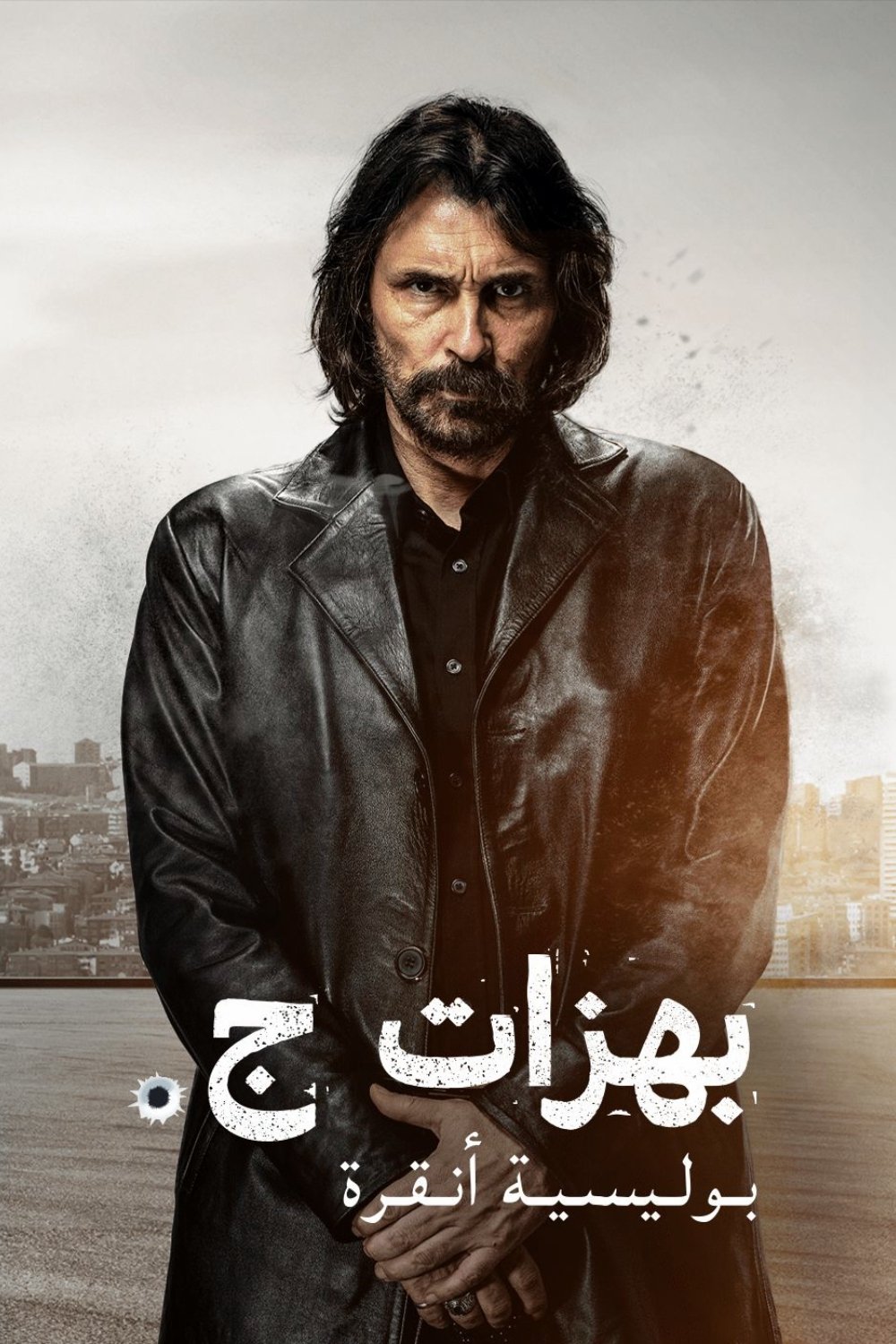

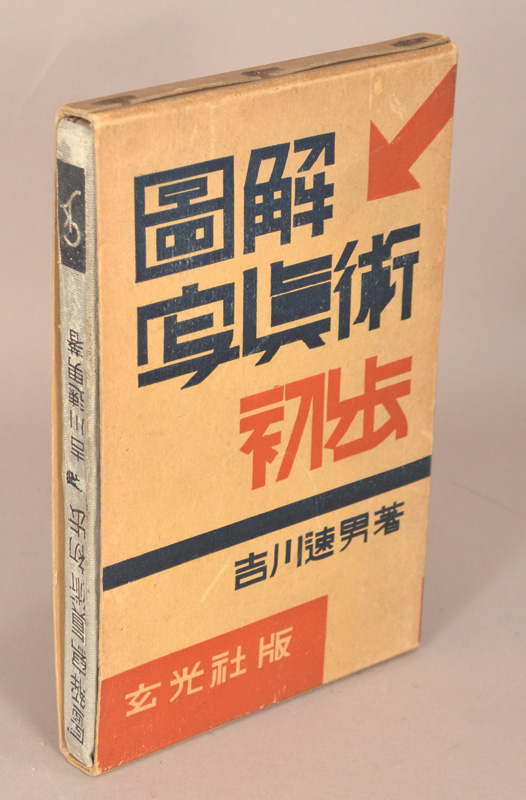

Moreover, the garbled text itself may provide clues about the original encoding. For instance, when text encoded in UTF-8 is read as if it were in ISO-8859-1, each accented letter turns into a pair of characters, typically beginning with Ã or Â, so that é becomes Ã© and ç becomes Ã§. A similar telltale pattern appears when Chinese characters encoded in UTF-8 are read as if they were in GBK: the bytes are regrouped into pairs, so the text turns into a string of unrelated, often rare, Chinese characters. Such patterns provide a valuable starting point for the recovery process.
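The telltale pattern left by UTF-8 read as ISO-8859-1 can be checked mechanically. The Python sketch below looks for the ISO-8859-1 images of UTF-8 lead bytes followed by the images of continuation bytes; the names `SUSPICIOUS` and `looks_misdecoded` are hypothetical, and the check assumes strict ISO-8859-1 rather than Windows-1252, which maps some of these bytes to other characters.

```python
import re

# A UTF-8 lead byte (0xC2-0xDF, or 0xE0-0xEF for three-byte sequences) seen
# through ISO-8859-1, followed by one or two continuation bytes (0x80-0xBF)
# seen the same way.
SUSPICIOUS = re.compile(
    r"[\u00c2-\u00df][\u0080-\u00bf]"
    r"|[\u00e0-\u00ef][\u0080-\u00bf]{2}"
)

def looks_misdecoded(text):
    """Heuristic: True if text contains a pair typical of misread UTF-8."""
    return SUSPICIOUS.search(text) is not None

print(looks_misdecoded("franÃ§ais"))  # True
print(looks_misdecoded("français"))   # False
```

A match is a hint rather than proof, since such pairs can occasionally occur in legitimate text.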

Furthermore, understanding the context of the text is also crucial. Knowing the language, the subject matter, or the origin of the text can help to identify the correct encoding. If the text is known to be in a specific language, it is often possible to narrow down the range of possible encodings. For example, if the text is in French, the most likely encodings are UTF-8 and ISO-8859-1. The context helps to solve the mystery of the garbled text.
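Once context has narrowed the field to a few candidates, the raw bytes can be tested against each one. The sketch below assumes the French case from the text, with UTF-8 and ISO-8859-1 as the only candidates; `guess_encoding` is a hypothetical helper, not a library function.

```python
def guess_encoding(data, candidates=("utf-8", "iso-8859-1")):
    """Return the first candidate that decodes the bytes without error."""
    for name in candidates:
        try:
            data.decode(name)
        except UnicodeDecodeError:
            continue  # these bytes are not valid in this encoding
        return name
    return None

print(guess_encoding("français".encode("utf-8")))       # utf-8
print(guess_encoding("français".encode("iso-8859-1")))  # iso-8859-1
```

The order of candidates matters: ISO-8859-1 accepts any byte sequence, so the stricter UTF-8 is tried first, and accented ISO-8859-1 text rarely happens to be valid UTF-8.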

Therefore, when encountering garbled text online, the first step is to determine the source of the problem. Was the text copied from a different source? Was it created in a specific software? Once the source is known, it is easier to troubleshoot the encoding. By using online decoders, programming languages, character tables, and a deep understanding of how encoding works, the digital whispers of the internet can be restored to their original form, allowing us to understand the message the author was attempting to convey.

As the internet becomes increasingly globalized, and more data is exchanged between different systems, the problem of garbled text will only increase. However, by understanding the fundamentals of character encoding and by utilizing the available tools, we can continue to make the digital world a more readable, understandable, and accessible place for all.